On June 13, the DeepFake Detection Challenge (DFDC), jointly launched by Facebook, Microsoft, Amazon, MIT and other well-known companies and universities, announced its final results. The team “\WM/”, led by Prof. YU Nenghai and Prof. ZHANG Weiming from the Department of Cyberspace Security, University of Science and Technology of China (USTC), won the 2nd Prize (US$300,000) among 2,265 teams, the best result of any team from China.

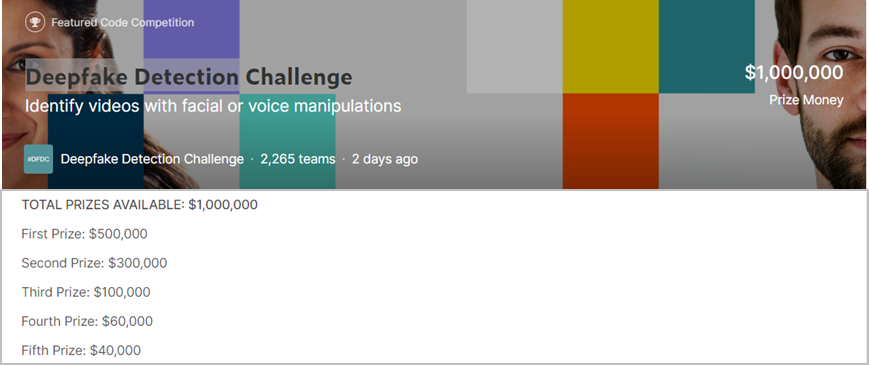

A snapshot of the DFDC website

DeepFake, or face forgery, is a technique that has emerged in recent years to synthesize fake videos with deep learning algorithms. Traditional face forgery relied on complex computer-graphics pipelines, but thanks to the rapid development of artificial intelligence (AI), DeepFake has greatly lowered the technical barrier. The technique has spawned numerous entertainment-oriented face-swapping apps such as ZAO and Impressions. However, its prevalence also brings serious potential risks, especially in politics, finance and public security. If it were used maliciously, for instance to forge identities or incite public opinion, it could do tremendous damage to the country and society. Effective fake-face detection technology is therefore needed worldwide. Against this backdrop, Facebook, Microsoft, Amazon and other international technology giants launched the DeepFake Detection Challenge on the Kaggle competition platform, with total prize money of up to US$1 million for the top 5 teams, one of the largest prize pools on Kaggle. Because of the organizers' influence across many fields and the growing interest in DeepFake research, the competition attracted widespread attention: 2,265 teams from universities and enterprises took part and submitted more than 35,000 models.

DFDC released the largest face-forgery video data set to date. It contained more than 110,000 fake-face videos produced by a variety of face-swapping and expression-manipulation algorithms, including DeepFake, Face2Face and other methods. Participating teams used this data set to train their detection models. The competition also provided two hidden online test sets: a public test set of 4,000 videos for verifying algorithm performance during the competition, and a private test set of 10,000 videos for computing the final score. Final rankings were determined by each detection model's log loss on the private test set (lower is better).
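To illustrate how a gap of about 0.0005 can separate first and second place: on Kaggle, each DFDC submission output a probability that a video is fake, and the leaderboard scored these with binary log loss, $-\frac{1}{n}\sum_i [y_i \log p_i + (1-y_i)\log(1-p_i)]$, where lower is better. Below is a minimal Python sketch of that formula, assuming label 1 means fake and 0 means real. The function name `dfdc_log_loss` and the clipping constant `eps` are illustrative assumptions, not Kaggle's actual scoring code.

```python
import math


def dfdc_log_loss(y_true, y_pred, eps=1e-15):
    """Mean binary log loss (hypothetical helper, not Kaggle's scorer).

    y_true: labels, assumed 1 = fake video, 0 = real video.
    y_pred: predicted probability that each video is fake.
    eps:    assumed clipping constant so log(0) is never evaluated.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # keep p strictly inside (0, 1)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


if __name__ == "__main__":
    labels = [1, 0, 1, 0]
    preds = [0.9, 0.1, 0.8, 0.3]
    print(round(dfdc_log_loss(labels, preds), 4))
```

Because log loss rewards calibrated probabilities rather than hard yes/no answers, confident mistakes are punished severely: predicting 0.01 for a fake video costs about 4.6 on that single sample, while always predicting 0.5 scores ln 2 ≈ 0.693. This is why small differences in calibration can decide the top of the leaderboard.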

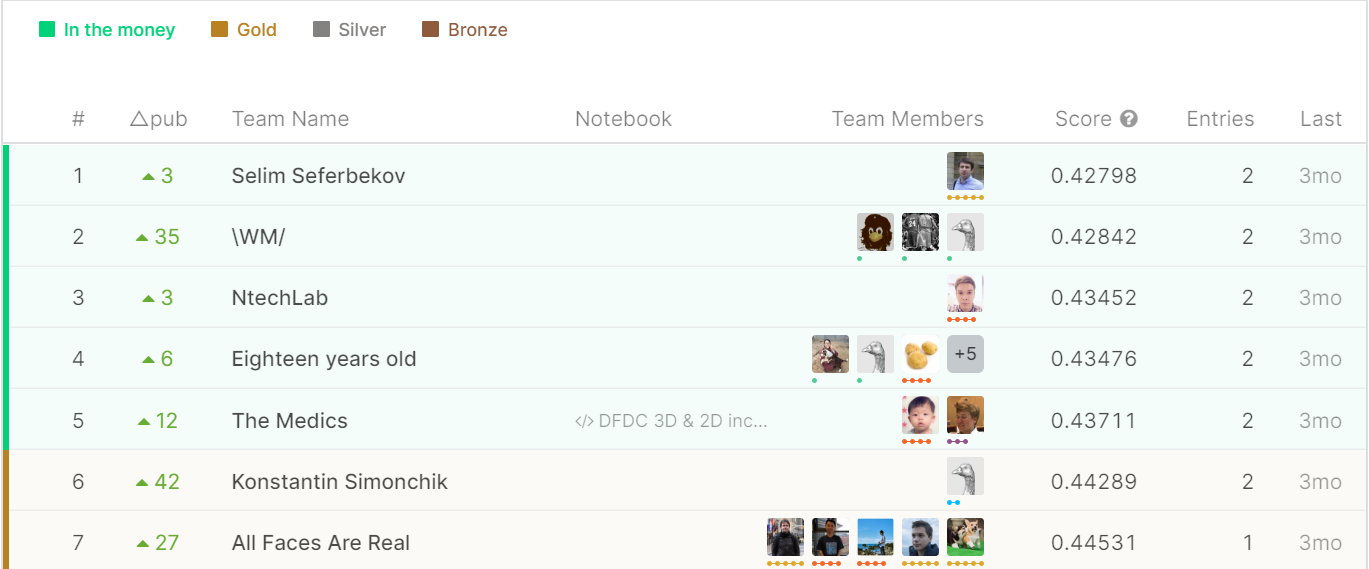

The final standings of the competition

The competition lasted three and a half months. After repeated experiments and continuous algorithm improvement, the \WM/ team from USTC finished second worldwide, only 0.0005 points behind the winner. The team consists of a postdoctoral researcher, a PhD student and a master's student from the Information Processing Center of the Department of Cyberspace Security. The Information Processing Center of USTC was funded by a donation from Mr. SHAO Yifu, a well-known Hong Kong industrialist. Founded in 1989, it has an outstanding record in scientific research and talent development in multimedia and AI.

The \WM/ team’s advisors, Prof. YU Nenghai and Prof. ZHANG Weiming, have long been engaged in multimedia and AI security research. Their research group is affiliated with the Information Processing Center of USTC and has made a series of important advances in adversarial examples, deep learning model protection, and deepfake generation and detection. Its members have published many high-impact papers at top venues such as CVPR, AAAI and ICCV, and the group won first place in the defense track of the 2019 IJCAI-Alibaba AI algorithm competition. The result in DFDC marks an important breakthrough for USTC in the field of AI security.

(Written by ZHAO Xiaona, edited by JIANG Pengcen, USTC News Center)